Computer sound cards are the norm at nearly all radio stations. I often wonder: am I using the sound card with the best audio quality? There are trade-offs on the quality vs. cost curve. At the expensive end of the curve, one can spend a lot of money on an excellent sound card. The question is, is it worth it? The law of diminishing returns says no. High-quality audio reproduction can be obtained for a reasonable price. The one possible exception to that rule would be production studios, especially where music mix-downs occur.

I would establish that the basic requirement for a professional sound card is balanced audio in and out, either analog, digital, or preferably both. Almost all sound cards work on the PCI bus architecture; some are available with PCMCIA (laptop) or USB interfaces. For permanent installations, an internal PCI bus card is preferred.

To keep this an apples-to-apples comparison, it is limited to PCI bus cards with stereo input/output and both analog and digital balanced audio, intended for general use. Manufacturers of these cards often have other units with a higher number of input/output combinations if that is desired. There are several cards to choose from:

My first choice for a general all-around sound card is the Digigram VX222HR series. This is a mid-price-range PCI card, running about $525.00 each.

These are the cards preferred by BE Audiovault, ENCO, and others. I have found them to be easy to install, with copious documentation and driver downloads available online. The VX series cards are available in 2, 4, 8, or 12 input/output configurations. The HR suffix stands for “High Resolution,” which indicates a 192 kHz sample rate. This card is capable of generating baseband composite audio, including RDS and subcarriers, with a program like Breakaway Broadcast.
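As a rough illustration of why the 192 kHz sample rate matters for composite generation: a converter can only represent frequencies below half its sample rate (the Nyquist limit). The sketch below checks which parts of the FM composite baseband fit under that limit at common sample rates. The component list uses nominal FM broadcast frequencies and is my own illustration, not something taken from Digigram or Breakaway documentation; the function names are hypothetical.

```python
# Nominal FM composite baseband components (assumed for illustration).
# Values are the upper edge or nominal center frequency in Hz.
COMPOSITE_COMPONENTS_HZ = {
    "stereo pilot": 19_000,
    "L-R subcarrier upper edge": 53_000,
    "RDS subcarrier": 57_000,
    "67 kHz SCA": 67_000,
    "92 kHz SCA": 92_000,
}


def nyquist_hz(sample_rate_hz):
    """Highest frequency representable at the given sample rate."""
    return sample_rate_hz / 2


def components_within(sample_rate_hz):
    """Names of components whose frequency is below the Nyquist limit."""
    limit = nyquist_hz(sample_rate_hz)
    return [name for name, freq in COMPOSITE_COMPONENTS_HZ.items() if freq < limit]


if __name__ == "__main__":
    for rate in (48_000, 96_000, 192_000):
        fits = components_within(rate)
        print(f"{rate / 1000:.0f} kHz sample rate, "
              f"Nyquist {nyquist_hz(rate) / 1000:.0f} kHz: {', '.join(fits)}")
```

At 192 kHz the limit is 96 kHz, so every component listed fits; at 96 kHz only the pilot does. A real system also needs headroom for filtering and for the sidebands around each subcarrier, which this check ignores.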

Quick Specs:

- 2/2 balanced analog and digital AES/EBU I/Os

- A comprehensive set of drivers: driver for the Digigram SDK, as well as low-latency WDM DirectSound, ASIO, and Wave drivers

- 32-bit/66 MHz PCI Master mode, PCI and PCI-X compatible interface

- 24-bit/192 kHz converters

- LTC input and inter-board Sync

- Windows Server 2003, Server 2008, 7, 8, Vista, XP (32 and 64 bit), ALSA (Linux)

- Hardware SRC on AES input and separate AES sync input (available on special request)

Next is the Lynx L22-PCI. This card comes with a rudimentary 16-channel mixer program. I have found these cards to be durable and slightly more flexible than the Digigram cards. They run about $670.00 each. Again, they are capable of a 192 kHz sample rate on the analog inputs/outputs. Like Digigram, Lynx has several other sound cards with multiple inputs/outputs that are appropriate for broadcast applications.

Specifications:

- 200kHz sample rate / 100kHz analog bandwidth (Supported with all drivers)

- Two 24-bit balanced analog inputs and outputs

- +4dBu or -10dBV line levels selectable per channel pair

- 24-bit AES3 or S/PDIF I/O with full status and subcode support

- Sample rate conversion on digital input

- Non-audio digital I/O support for Dolby Digital® and HDCD

- 32-channel / 32-bit digital mixer with 16 sub outputs

- Multiple dither algorithms per channel

- Word, 256 Word, 13.5MHz or 27MHz clock sync

- Extremely low-jitter tunable sample clock generator

- Dedicated clock frequency diagnostic hardware

- Multiple-board audio data routing and sync

- Two LStream™ ports support 8 additional I/O channels each

- Compatible with LStream modules for ADAT and AES/EBU standards

- Zero-wait state, 16-channel, scatter-gather DMA engine

- Windows 2000/XP/XPx64/7/8/Vista/Vistax64: MME, ASIO 2.0, WDM, DirectSound, Direct Kernel Streaming and GSIF

- Macintosh OSX: CoreAudio (10.4)

- Linux, FreeBSD: OSS

- RoHS Compliant

- Optional LStream Expansion Module LS-ADAT: provides sixteen-channel 24-bit ADAT optical I/O (Internal)

- Optional LStream Expansion Module LS-AES: provides eight-channel 24-bit/96kHz AES/EBU or S/PDIF digital I/O (Internal)
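The selectable +4 dBu and -10 dBV line levels in the list above use different reference voltages, so the gap between them is not simply 14 dB. A minimal sketch of the conversion, assuming the standard references (0 dBu = about 0.7746 V RMS, 0 dBV = 1 V RMS); the function names are hypothetical:

```python
import math

# Standard reference voltages (RMS).
DBU_REF_V = math.sqrt(0.6)  # about 0.7746 V: 1 mW into 600 ohms
DBV_REF_V = 1.0


def dbu_to_volts(dbu):
    """Convert a level in dBu to volts RMS."""
    return DBU_REF_V * 10 ** (dbu / 20)


def dbv_to_volts(dbv):
    """Convert a level in dBV to volts RMS."""
    return DBV_REF_V * 10 ** (dbv / 20)


def level_difference_db(v1, v2):
    """Difference in dB between two RMS voltages."""
    return 20 * math.log10(v1 / v2)


if __name__ == "__main__":
    pro = dbu_to_volts(4)        # professional line level
    consumer = dbv_to_volts(-10)  # consumer line level
    print(f"+4 dBu  = {pro:.3f} V RMS")
    print(f"-10 dBV = {consumer:.3f} V RMS")
    print(f"difference = {level_difference_db(pro, consumer):.2f} dB")
```

The professional level works out to roughly 1.23 V RMS and the consumer level to roughly 0.32 V RMS, a difference of just under 12 dB. Mismatching the setting per channel pair therefore costs about that much headroom or signal-to-noise.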

Audio Science makes several different sound cards, which are used by BSI and other automation systems. These cards run about $675 each.

Specifications:

- 6 stereo streams of playback into 2 stereo outputs

- 4 stereo streams of record from 2 stereo inputs

- PCM format with sample rates to 192kHz

- Balanced stereo analog I/O with levels to +24dBu

- 24bit ADC and DAC with 110dB DNR and 0.0015% THD+N

- SoundGuard™ transient voltage suppression on all I/O

- Short length PCI format (6.6 inches/168mm)

- Up to 4 cards in one system

- Windows 2000, XP and Linux software drivers available.
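To put the 24-bit, 110 dB DNR, and 0.0015% THD+N figures above in context, the sketch below compares them with the textbook limit for an ideal converter. It assumes the usual approximation of 6.02 dB per bit plus 1.76 dB for a full-scale sine wave; the "equivalent bits" figure is only a rough illustration, not a manufacturer specification, and the function names are hypothetical.

```python
import math


def ideal_dynamic_range_db(bits):
    """Theoretical dynamic range of an ideal N-bit converter (full-scale sine)."""
    return 6.02 * bits + 1.76


def equivalent_bits(dnr_db):
    """Number of ideal bits that would give the stated dynamic range."""
    return (dnr_db - 1.76) / 6.02


def percent_to_db(percent):
    """Express a percentage ratio (such as THD+N) in dB relative to the signal."""
    return 20 * math.log10(percent / 100)


if __name__ == "__main__":
    print(f"Ideal 24-bit range: {ideal_dynamic_range_db(24):.2f} dB")
    print(f"110 dB DNR is about {equivalent_bits(110):.1f} ideal bits")
    print(f"0.0015% THD+N is about {percent_to_db(0.0015):.1f} dB")
```

The ideal 24-bit figure is about 146 dB, so a stated 110 dB corresponds to roughly 18 ideal bits. That is normal for real converters, where analog noise rather than word length sets the floor.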

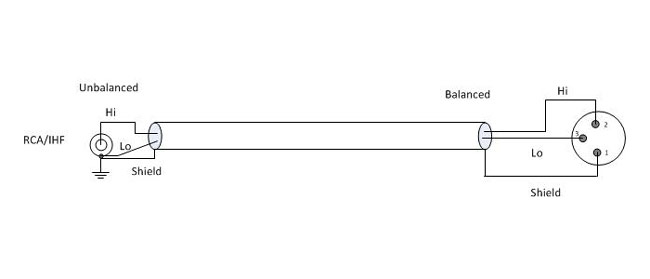

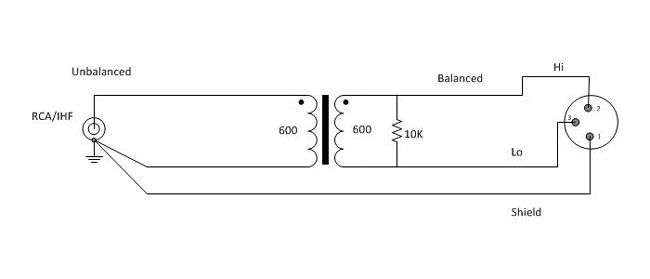

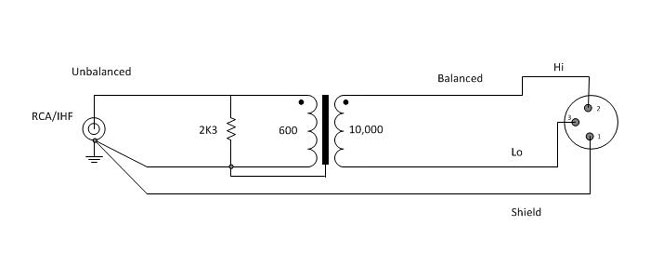

There are several other cards and card manufacturers that do not use balanced audio. These cards can be used with caution, but they are not recommended in high-RF environments like transmitter sites or studios located at transmitter sites. Appropriate measures for converting audio from balanced to unbalanced must be observed.

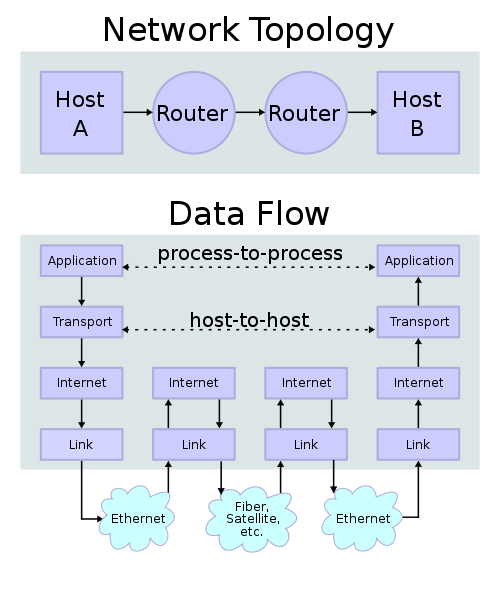

Further, there are many ethersound systems coming into the product pipeline that convert audio directly to TCP/IP for routing over an Ethernet 802.x-based network. These systems are coming down in price and are being looked at more favorably by broadcast groups. This is the future of broadcast audio.
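For a sense of scale when routing audio over a network, the raw payload rate of uncompressed PCM is simply sample rate times bit depth times channel count. The sketch below is a back-of-the-envelope calculation only: it ignores packet headers and protocol overhead, does not model any particular product, and uses hypothetical function names.

```python
def pcm_bitrate_bps(sample_rate_hz, bit_depth, channels):
    """Raw payload bit rate of uncompressed PCM audio, in bits per second."""
    return sample_rate_hz * bit_depth * channels


if __name__ == "__main__":
    # Stereo, 24-bit audio at several common sample rates.
    for rate in (44_100, 48_000, 96_000, 192_000):
        mbps = pcm_bitrate_bps(rate, 24, 2) / 1_000_000
        print(f"{rate / 1000:g} kHz, 24-bit stereo: {mbps:.3f} Mbit/s")
```

A 48 kHz, 24-bit stereo stream comes to about 2.3 Mbit/s before overhead, which is a small fraction of even a 100 Mbit/s link.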