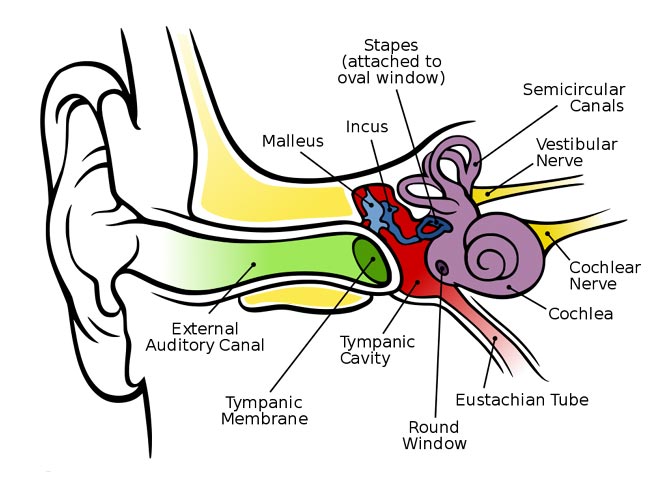

I give you, The Human Ear:

All of the programming elements, all of the engineering equipment and practices, all of the creative process, the music, the talk, the commercials, everything that goes out over the air should reach as many ears as possible. That is the business of radio. The quality of the sound and of the listening experience are often lost in the process.

Unfortunately, a large segment of the population has been conditioned to accept the relatively low quality of .mp3 and other digital files delivered via computers and smartphones. There is some hope, however: when exposed to good-sounding audio, most people respond favorably, or are in fact amazed that music can sound that good.

There are few fundamentals as important as sounding good. Buying the latest Frank Foti creation and hitting preset #10 is all well and good, but what is it that you are really doing?

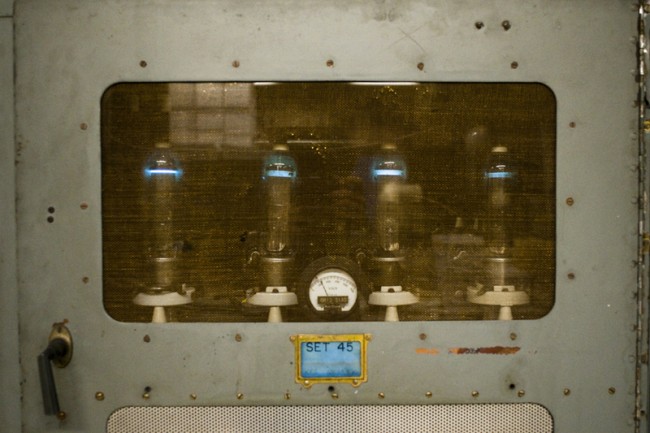

There was a time when the FCC required a full audio proof every year. That meant dragging the audio test equipment out and running a full sweep of tones through the entire transmission system, usually late at night. It was a great pain; however, it was also a good exercise in basic physics. Understanding pre-emphasis and de-emphasis curves, how an STL system can add distortion and overshoot, how clean (distortion-wise) the output of the console is, how clean the transmitter modulator is, how to correct for low-frequency tilt and high-frequency ringing: all of those are basic tenets of broadcast engineering. Today, those things are mostly taken for granted or ignored.
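
As a rough illustration of what a pre-emphasis curve looks like, here is a minimal sketch. It assumes the single-pole 75 µs time constant used for FM broadcasting in the United States; the post does not say which curve is meant, and AM (NRSC) uses a different one. The function name is made up for this example.

```python
import math

def preemphasis_boost_db(freq_hz, time_constant_s=75e-6):
    """Boost in dB of an ideal single-pole pre-emphasis network.

    The 75 microsecond default is an assumption (US FM practice).
    The matching de-emphasis curve is simply the negative of this value.
    """
    omega_tau = 2.0 * math.pi * freq_hz * time_constant_s
    return 10.0 * math.log10(1.0 + omega_tau ** 2)

# Print the boost at a few audio frequencies across the band.
for f in (100, 1000, 2122, 5000, 10000, 15000):
    print(f"{f:>6} Hz: +{preemphasis_boost_db(f):.2f} dB")
```

The boost is negligible at low frequencies, reaches about 3 dB near 2.1 kHz, and climbs to roughly 17 dB at 15 kHz, which is why high-frequency peaks are what a processor ends up fighting.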

Every ear is different and responds to sound slightly differently. The frequencies and SPLs given here are averages; some people have hearing that extends well above or below average, but they are the exception.

Understanding audio is a good start. Audio is also known as sound pressure waves. A speaker system generates alternating areas, or waves, of lower and higher pressure in the atmosphere. The wavelength depends on the frequency of vibration, and the amplitude depends on the energy behind the vibrations. Like radio, audio travels in a wave outward from its source, decreasing in intensity as it spreads over a larger area. In free space the intensity falls with the square of the distance, which on the logarithmic decibel scale works out to about 6 dB per doubling of distance.
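
To put numbers on that fall-off, here is a minimal sketch. It assumes an idealized point source in free space (no room reflections), and the 90 dB SPL at 1 m reference is purely illustrative.

```python
import math

def spl_at_distance(spl_ref_db, ref_distance_m, distance_m):
    """SPL at distance_m, given spl_ref_db measured at ref_distance_m.

    Assumes a point source radiating into free space, so intensity
    follows the inverse-square law.
    """
    if ref_distance_m <= 0 or distance_m <= 0:
        raise ValueError("distances must be positive")
    return spl_ref_db - 20.0 * math.log10(distance_m / ref_distance_m)

# Illustrative reference: 90 dB SPL measured 1 m from the speaker.
for d in (1, 2, 4, 8):
    print(f"{d} m: {spl_at_distance(90.0, 1.0, d):.1f} dB SPL")
```

Each doubling of distance costs about 6 dB. A real room will not follow this exactly, since reflections add energy back.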

The human ear is optimized for hearing in the mid-range band around 3 kHz, slightly higher for women and lower for men. This is because the ear canal acts as a quarter-wavelength resonator at those frequencies. Midrange is most associated with the human voice and the perceived loudness of program material.
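
The quarter-wavelength figure is easy to check. This is a minimal sketch that treats the ear canal as a simple tube closed at one end (the eardrum); the canal lengths are illustrative assumptions, not measured values.

```python
SPEED_OF_SOUND_M_S = 343.0  # dry air at roughly 20 degrees C

def quarter_wave_resonance_hz(canal_length_m, speed_m_s=SPEED_OF_SOUND_M_S):
    """First resonance of a tube closed at one end: f = c / (4 * L)."""
    if canal_length_m <= 0:
        raise ValueError("length must be positive")
    return speed_m_s / (4.0 * canal_length_m)

# Assumed canal lengths in metres, chosen around a nominal 2.5 cm.
for length_m in (0.023, 0.025, 0.027):
    f = quarter_wave_resonance_hz(length_m)
    print(f"{length_m * 100:.1f} cm canal: about {f:.0f} Hz")
```

A canal of about 2.5 cm lands near 3.4 kHz, and a shorter canal resonates higher, which is consistent with the slight difference noted above.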

Bass frequencies contain a lot of energy due to the longer wavelengths. This energy is often transmitted into structural members without adding much to the listening experience, because the ear's sensitivity rolls off sharply below about 100 Hz. Too much bass energy in radio programming can sap loudness by reducing the midrange and high-frequency energy in the modulated product.

High frequencies offer directivity, as in left-right stereo separation. Too much high-frequency energy sounds shrill and can adversely affect female listeners, as they are more sensitive to high-end audio because of smaller ear canals and tympanic membranes.

Processing programming material is a highly subjective matter. I am a minimalist; I think that too much processing is self-defeating. I have listened to a few radio stations that have given me a headache after 10 minutes or so. Overly processed audio sounds splashy, contrived, and fake, with unnatural sounds and separation. A good idea is to understand each station’s processing goals. A hip-hop or CHR station is obviously looking for something different than a classical music station.

For the non-engineer, there are three main effects of processing: equalization, compression (AKA gain reduction), and expansion. Then there are other things like phase rotation, pre-emphasis or de-emphasis, limiting, clipping, and harmonics.

EQ is a matter of taste, although it can be used to overcome some non-uniformity in STL paths. Compression is a way to bring up quiet passages and increase sound density or loudness. Multi-band compression is all the rage; it allows each of the four bands to react differently to program material, which can really make things sound different than they were recorded. Misadjusting a multi-band compressor can make audio sound really bad. Compression is dictated not only by the amount of gain reduction but also by the ratio, attack, and release times. Limiting is related to compression, but acts only on the highest peaks. A certain amount of limiting is good, as it acts to keep programming levels constant. Clipping is a last-resort method for keeping errant peaks from affecting modulation levels. Expansion is often used on microphones and is a poor substitute for a well-built, quiet studio. Expansion often adds swishing effects to microphones.
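
To make ratio, attack, and release concrete, here is a minimal single-band sketch: a hard-knee gain computer followed by one-pole smoothing. It is not how any particular broadcast processor works; the function names, the -20 dB threshold, the 4:1 ratio, and the timing values are all assumptions chosen for illustration.

```python
import math

def static_gain_db(level_db, threshold_db=-20.0, ratio=4.0):
    """Gain in dB (zero or negative) applied at a given input level.

    Below the threshold nothing happens. Above it, the output rises
    1 dB for every `ratio` dB of input. A very high ratio behaves
    like a limiter.
    """
    if level_db <= threshold_db:
        return 0.0
    compressed_db = threshold_db + (level_db - threshold_db) / ratio
    return compressed_db - level_db

def smooth_gain(target_gains_db, sample_rate=48000,
                attack_ms=5.0, release_ms=200.0):
    """Apply attack/release timing to a sequence of target gains.

    Gain reduction is applied quickly (attack) and let go slowly
    (release), using a one-pole filter in each direction.
    """
    attack = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    smoothed, current = [], 0.0
    for target in target_gains_db:
        coeff = attack if target < current else release
        current = coeff * current + (1.0 - coeff) * target
        smoothed.append(current)
    return smoothed

# A peak 12 dB over the threshold comes out only 3 dB over it.
print(static_gain_db(-8.0))
```

With these settings a peak at -8 dB gets 9 dB of gain reduction. The "bringing up quiet passages" part comes from adding make-up gain afterward, which this sketch leaves out.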

I may break down the effects of compression and EQ in a separate post. The effects of odd and even order audio harmonics could easily fill a book.

I remember the patch panels that patched around certain audio processors when doing proofs at certain stations. At WISN, this was never done when I was there. There was great pride in striving for “perfection in transmission quality”. A flat frequency response curve was the golden rule, along with the lowest harmonic distortion possible. And when the PDM rigs arrived, other annoying factors came into being: audio intermodulation distortion, and RF harmonics outside of FCC Rules. These problems were eventually ironed out, but around this time the FCC seemed not to care much about doing the right things. The stupid 125% positive peak rule for AM was, I believe, the beginning of AM's decline. NRSC didn’t do much to enhance transmission quality either. Then came the processor wars and the Gates “Audio Enhancer” on a few transmitters. More distortion, and today HD radio on AM should really mean “Huge Distortion” compared to the once plain A3 emission.